AI navigation system for living donor surgery: seven process classification of laparoscopic nephrectomy by deep learning

Masato Nishihara1, Noriyuki Masaki1, Ichiro Nakajima2, Tamotsu Tojimbara3, Makoto Tonsho1.

1Transplant Surgery, International University of Health and Welfare, Mita Hospital, Tokyo, Japan; 2Kidney Surgery, Tokyo Women's Medical University Hospital, Tokyo, Japan; 3Transplant Surgery, International University of Health and Welfare, Atami Hospital, Shizuoka, Japan

Introduction: Laparoscopic donor nephrectomy is performed on healthy individuals, so a navigation system that supports a high level of safety is needed. Because an algorithm that classifies the surgical process of laparoscopic donor nephrectomy can be used to estimate the current position within the operation (self-localization), this study aimed to develop such an algorithm with deep learning and to assess the ability of artificial intelligence to learn and recognize surgical scenes in videos of laparoscopic donor nephrectomy.

Materials and Methods:

Dataset

High-definition videos of laparoscopic left donor nephrectomies were collected for this analysis. All cases were performed by a single operator in the same manner. The scenes in each video were divided into seven processes (P0 to P6), and the transitions between processes were annotated manually: (P0) retroperitoneal dissection, (P1) exposure of the lateral aspect and lower pole of the kidney, (P2) periureteral dissection, (P3) exposure of the anterior and posterior surfaces of the kidney, (P4) hilar dissection, (P5) division of the ureter, and (P6) stapling and division of the renal artery and vein. The frame rate of each video was 30 frames per second. The videos were converted into frame images, and each image was given the label of the video segment it came from.
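The frame-level labeling described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `frame_labels`, the `(process, start, end)` segment format, and the example timings are all assumptions made for this sketch; only the seven process names and the frame rate of 30 come from the text.

```python
FPS = 30  # frame rate stated in the text

def frame_labels(segments, fps=FPS):
    """Expand (process, start_s, end_s) segments into one label per frame.

    `segments` is a hypothetical annotation format: each entry names a
    process (P0 to P6) and its start and end time in seconds.
    """
    labels = []
    for process, start_s, end_s in segments:
        n_frames = round((end_s - start_s) * fps)
        labels.extend([process] * n_frames)
    return labels

# Hypothetical timings in seconds, for illustration only.
example_segments = [("P0", 0, 2), ("P1", 2, 3)]
labels = frame_labels(example_segments)
```

Every frame extracted from a segment simply inherits that segment's process label, so the annotation effort stays at the level of transitions rather than individual images.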

Training

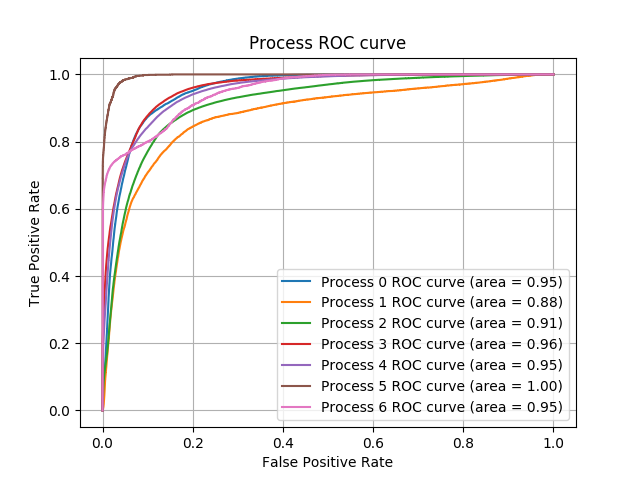

EfficientNetB3 was used as the base deep neural network, modified for binary classification, and trained on 6 videos for each process. Parameters were tuned on 2 videos as the validation dataset, and the algorithm was tested on 2 videos as the test dataset. Performance was assessed with the area under the ROC curve (AUC). As a result, 7 algorithms were developed, one for predicting each process from P0 to P6.
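To make the evaluation concrete, the sketch below shows how a per-process binary target can be derived from frame labels and how an AUC can be computed from classifier scores. It is an assumption-laden illustration: the helper names `binary_targets` and `roc_auc`, the toy labels, and the toy scores are hypothetical, and the AUC is computed with the pairwise-ranking definition rather than with whatever tooling the authors used.

```python
def binary_targets(labels, positive):
    """One-vs-rest targets: 1 for frames of `positive`, 0 for all others."""
    return [1 if lab == positive else 0 for lab in labels]

def roc_auc(targets, scores):
    """AUC as the probability that a random positive frame scores higher
    than a random negative frame (ties count as one half)."""
    pos = [s for t, s in zip(targets, scores) if t == 1]
    neg = [s for t, s in zip(targets, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy data, for illustration only: four frames and the scores a
# hypothetical P0 classifier assigned to them.
labels = ["P0", "P0", "P1", "P2"]
scores_p0 = [0.9, 0.4, 0.6, 0.1]
targets = binary_targets(labels, "P0")
auc_p0 = roc_auc(targets, scores_p0)
```

Repeating this for each of the seven processes gives one AUC per binary classifier. The pairwise loop is quadratic in the number of frames, so a sort-based implementation would be preferable on full videos.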

Results: The AUCs for P0 to P6 were 0.951, 0.879, 0.914, 0.957, 0.949, 0.995, and 0.951, respectively (Figure). The mean AUC was 0.942.
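As a quick arithmetic check, the reported mean can be reproduced from the seven per-process values. The variable names below are chosen for this sketch; the numbers are those reported in the Results.

```python
# Per-process AUCs as reported in the Results.
aucs = {
    "P0": 0.951, "P1": 0.879, "P2": 0.914, "P3": 0.957,
    "P4": 0.949, "P5": 0.995, "P6": 0.951,
}

mean_auc = sum(aucs.values()) / len(aucs)  # unweighted mean over processes
lowest = min(aucs, key=aucs.get)           # process with the lowest AUC
highest = max(aucs, key=aucs.get)          # process with the highest AUC
```

The unweighted mean rounds to 0.942, matching the reported figure, with P1 the lowest and P5 the highest of the seven.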

Discussion: The high accuracy achieved even with a small training dataset may reflect the limited surgical and anatomical variation among laparoscopic donor nephrectomies. Enlarging the dataset and improving the algorithms, for example with ensemble methods, will be essential for higher accuracy.

Conclusion: We demonstrated that artificial intelligence can recognize the surgical processes of laparoscopic living-donor nephrectomy. This result suggests that a deep learning based navigation system could be applied to future surgical assistance systems to help ensure the safety of living donors.
